The second bot doesn’t know any strategies to win tic-tac-toe. This bot will simply try things out and give each move a score once the game ended. If the bot wins, all moves that lead to this situation will get score+1, if he loses, all moves will get score-1. The default score is 0.

So when it is this bots turn, it will decide based on the scores:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 | |

We then have to save the performed moves, so that we later can set the score accordingly:

1 2 3 4 5 6 7 8 9 10 11 12 | |

That’s it! So the learning AI is even simpler than the Minimax bot.

Results

The question is: How good is this bot?

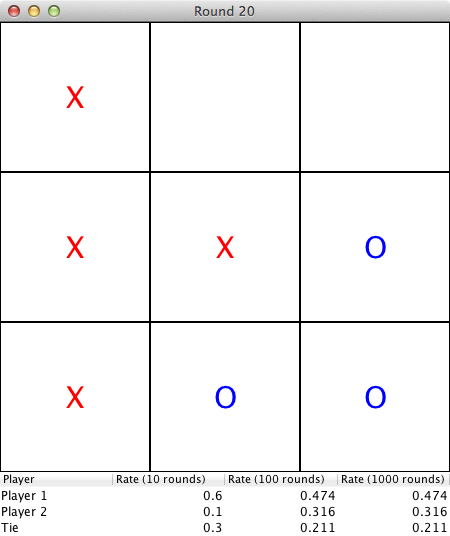

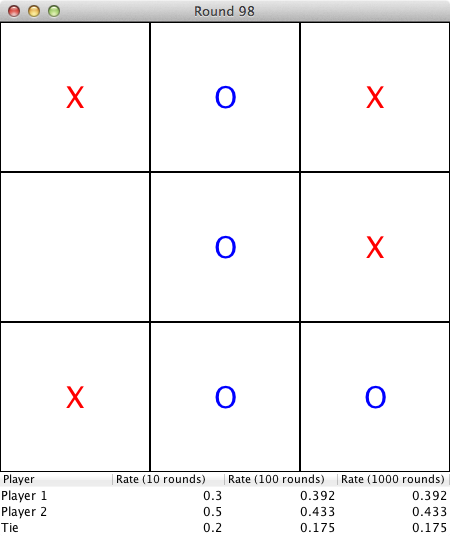

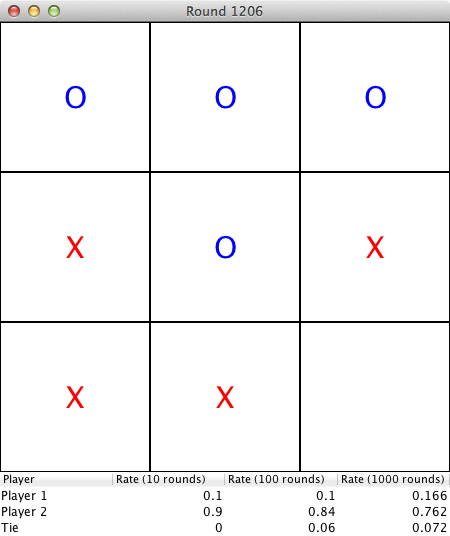

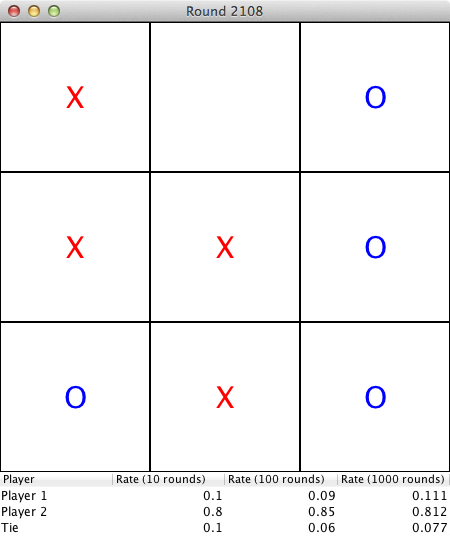

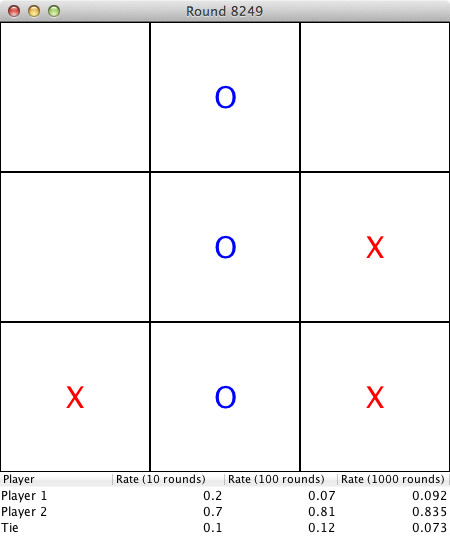

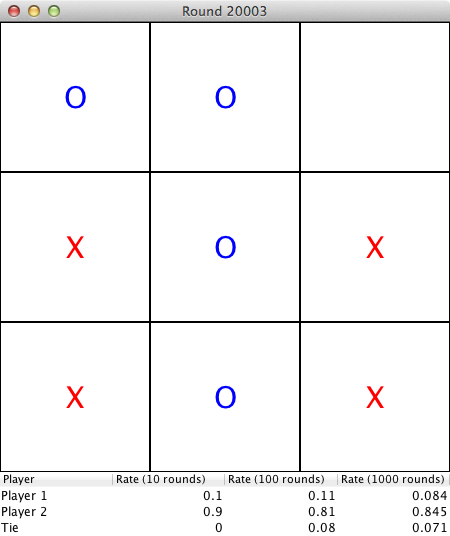

To measure this, I let the bot play against a really stupid bot (chooses always a random move). In the following screenshots: “Player 1” is the stupid bot, “Player 2” is the learning bot.

As you see, the learning bot is indeed smarter than a purely random but (as expected). Playing this 20.000 rounds took 15 minutes. Probably he would get even as good as the simulating bot (which has a winning rate of ~80% against the stupid bot but never loses) if he would be running/learning long enough.

Update 2012-09-16 19:41: After ~3.000 rounds, the learning bot achieved a 100% draw rate against the simulation bot. Yay! :)

Source Code

Once I finished refactoring the code, I will make my repository public on GitHub. In the meantime, you can download a playable version here: Simple Tic-tac-toe (Java 7 required)